AI-enabled Acoustic Intelligence for Anti-Submarine Warfare

From detecting hidden threats to defending critical underwater infrastructure, Anti-Submarine Warfare (ASW) is a cornerstone of national security. AI...

The past few months have shown us how quickly deep tech innovation, and especially advances in Artificial Intelligence (AI), can be developed and adopted at great scale. New AI tools have emerged at breakneck speed, opening up a range of opportunities to positively impact problem-solving, decision-making, and efficiency.

One of the most prolific to date, ChatGPT, has shown just how quickly advanced technology can arrive in the mainstream and capture the imaginations of experts and laypeople alike. This tool is just the latest step in a long and continuing line of advances in using AI to generate text. Its capabilities, which span everything from explaining quantum physics to writing poems, have sparked debates about the opportunities it could create and the impact it could have on the future of work.

Automation, Creativity, and the Future of Work

How susceptible are current jobs to these technological developments? Ten years ago, Mind Foundry co-founder and University of Oxford Professor Michael Osborne famously co-authored a paper with Carl Benedikt Frey to answer this very question. They concluded that 47% of total US employment was potentially at risk, and also noted that the most creative jobs were among the safest. Though there had been some notable examples of AI writing stories, creating art, composing music, or telling jokes, none of it was very good. Quite frankly, the jokes were bad.

In 2013, creativity was still for the humans.

Then, as 2022 came to a close, we saw a tipping point. Models like Stable Diffusion and ChatGPT gained worldwide attention for demonstrating an incredible capacity for generating images and text that were actually quite good. This raises the question: are humans still needed for the creative tasks that many of the jobs in our modern economies require?

Generating text is at the heart of many jobs, so large language models are a broadly applicable tool for the automation of work. But that doesn’t mean that humans are no longer required for creative tasks. Relative to humans, large language models have limited reliability, limited understanding, and limited range (they certainly can’t write coherent and interesting novels), and hence need human supervision. At least for now, they will be primarily used as a tool by human creators, rather than as a complete replacement for human work.

The true masters of their craft will be the ones who know which AI tools are available, how to use them together with other tools, and when to put them down and just be human. The question, then, isn’t “Will an AI take my job?” but rather, “Will a human effectively collaborating with AI take my job?”

(We'll have to wait and see, but the answer is probably yes.)

Transformative Potential

AI is no stranger to being challenged about the risks it presents to society. From facial recognition to content creation, AI-driven applications have the potential to affect people’s lives at both individual and population scale.

In fact, AI's potential impact is so great that Professor Osborne, when presenting to the UK Government’s Science and Technology Select Committee on the 25th of January 2023, compared it to that of nuclear weapons. That is to say, in the wrong hands and by scaling the wrong decisions, AI could have a profoundly negative impact on society. A similar sentiment was echoed a week later by ex-Google CEO Eric Schmidt, and this trend of likening the power of AI to that of nuclear weapons is starting to take the global media by storm.

But as media outlets increase their ad revenue by propagating scary Terminator-esque images of total annihilation, the more important story about the responsible use of AI, and how it can solve some of the world’s biggest problems, is often missed.

The Responsibility of AI

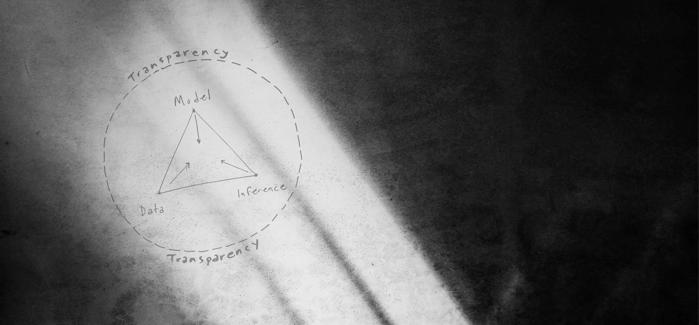

So, how do we go about ensuring that AI is adopted effectively and responsibly? To begin with, there is no one-size-fits-all AI system to suit all applications. Context is always going to be paramount. In our experience working with customers in high-stakes applications, we’ve found three considerations in particular that are so useful they now underpin all of our decision-making around AI.

Firstly, your AI needs to be transparent from the outset, and this transparency needs to cover the whole system, including the model, the data, and the inference. If any one of those three things is obscured, then a critical window of understanding is closed off, and the chance of a deployed model functioning irresponsibly increases. To overcome this, we can, and should, build models that directly give richer insight because the structure of the model itself is amenable to more open human interpretation and understanding.

Secondly, your AI needs to be not only intelligent but also wise. Bluntly put, and with a slight nod to Socrates, intelligence is knowing a lot of things, but wisdom is knowing how many things you don’t really know. This is a sort of humility, and in the case of AI and Machine Learning, this means we need to build humble algorithms that understand their limitations.

Sometimes the right decision for a model is to realise that it can't handle the exogenous shocks or extreme anomalous situations it is encountering. In those cases, it needs to be able to honestly say, “I can't do this.” Because when the risk is too high, often the best decision is to not make a decision at all, but to pass it over to a human expert. This is at the core of the best kinds of human-AI collaboration.
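To make the idea of a humble algorithm concrete, here is a minimal sketch of a model that declines to decide when it isn't confident enough. Everything in it is an illustrative assumption rather than a description of any particular system: the `Decision` type, the `decide_or_defer` function, and the `min_confidence` threshold of 0.9 are all hypothetical, and the sketch assumes the model outputs reasonably well-calibrated class probabilities.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass
class Decision:
    label: Optional[int]  # index of the chosen class, or None for "I can't do this"
    confidence: float     # highest class probability the model reported
    deferred: bool        # True when the case should go to a human expert


def decide_or_defer(probabilities: Sequence[float],
                    min_confidence: float = 0.9) -> Decision:
    """Pick the most likely class, or defer when confidence is too low.

    Hypothetical helper: the threshold is an assumption and would need to be
    chosen per application, based on how costly a wrong decision is.
    """
    if not probabilities:
        raise ValueError("probabilities must not be empty")

    # Find the class with the highest predicted probability.
    best = max(range(len(probabilities)), key=lambda i: probabilities[i])
    confidence = probabilities[best]

    # Below the threshold, make no decision and hand over to a human.
    if confidence < min_confidence:
        return Decision(label=None, confidence=confidence, deferred=True)
    return Decision(label=best, confidence=confidence, deferred=False)
```

With these assumptions, `decide_or_defer([0.05, 0.95])` returns class 1, while `decide_or_defer([0.55, 0.45])` is deferred. A single probability threshold is a crude stand-in for real uncertainty estimation: a model can be confidently wrong on inputs unlike anything it was trained on, so in practice this kind of check would sit alongside other safeguards.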

Thirdly, your AI needs to be constantly introspective and learning. At every point in its lifecycle, it needs to maintain an understanding of how healthy it is in terms of its ability to analyse a given data set and produce accurate outcomes. AI is not a set-and-forget technology. As the world changes, it needs to be able to evolve and change with it.
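One simple way to picture this kind of ongoing health check is a monitor that tracks how accurate a deployed model has been on its most recent predictions. The sketch below is a hedged illustration only: the `HealthMonitor` class, its window size, and its accuracy threshold are hypothetical, and it assumes that the true outcome for each prediction eventually becomes available, which is not always the case.

```python
from collections import deque
from typing import Optional


class HealthMonitor:
    """Tracks accuracy over a sliding window of recent predictions.

    Hypothetical sketch: `window` and `min_accuracy` are assumed values that
    would need tuning for a real application.
    """

    def __init__(self, window: int = 100, min_accuracy: float = 0.8):
        # A bounded deque drops the oldest outcome once the window is full.
        self.outcomes = deque(maxlen=window)
        self.min_accuracy = min_accuracy

    def record(self, predicted, actual) -> None:
        """Store whether the latest prediction matched the true outcome."""
        self.outcomes.append(predicted == actual)

    def accuracy(self) -> Optional[float]:
        """Return recent accuracy, or None if nothing has been recorded."""
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def is_healthy(self) -> bool:
        """Report unhealthy once recent accuracy falls below the threshold."""
        acc = self.accuracy()
        return acc is None or acc >= self.min_accuracy
```

When `is_healthy()` returns `False`, that is a signal to investigate, retrain, or route more decisions to people. Accuracy on labelled outcomes is only one possible health measure; monitoring how the input data itself shifts over time is a common complement when true outcomes arrive late or not at all.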

The Role of Regulations

Beyond these three principles, effective regulations, such as the ones currently being developed by the UK government and by many other governments around the world, will also play a big role in mitigating the risks associated with AI. We say effective, because if the resultant regulations end up being defined arbitrarily, filled with too many exceptions for special actors (or governments), or administered by regulators who don’t fully understand the technologies they’re charged with regulating, they could end up producing the opposite of the intended result.

But it’s generally better to have some regulation than ambiguity, and one of the best things about regulations is that they almost always make people think very carefully about, and scrutinise, the way in which they’re going to use advanced algorithms. This is a key component of responsible AI because, when we think about AI going wrong, the problem is rarely with the algorithm itself. More often, it lies with the human decisions surrounding its use, and with the retrospective use of algorithms to construct a chain of reasoning that lets an agent justify a poor decision. Hopefully, effective regulation, paired with AI built upon responsible principles, will allow AI to flourish and empower human decision-making for decades to come.

At a time when technological advancements move at breakneck speed, it’s worth taking a moment to reflect on the successes of the past. Facing big problems is nothing new. We’ve done this before. We’ve succeeded. We’ve managed to live with the existence of nuclear weapons since 1945 without the Doomsday Clock striking midnight. As Osborne and Schmidt point out, AI poses a similar risk, but equally offers a once-in-a-lifetime opportunity to drastically change the way we live, work, and think. The problems facing the world today are vast, but when the stakes are this high, we must not sit idly by. We must act. But when we act, we must do so decisively, and we cannot get it wrong.

Enjoyed this blog? Check out 'Explaining AI Explainability'.
