AI-enabled Acoustic Intelligence for Anti-Submarine Warfare

From detecting hidden threats to defending critical underwater infrastructure, Anti-Submarine Warfare (ASW) is a cornerstone of national security. AI...

Mind Foundry

Updated on April 9, 2024

We’re delighted to announce that we won a prestigious CogX award in the “Best Innovation in Explainable AI” category last night.

The CogX Awards recognise innovators and change-makers looking to make a real impact on the world around us with technology. At the CogX Awards 2022 Gala event last night, we joined our fellow nominees for a great evening celebrating groundbreaking innovations and the incredible minds behind them.

Winning any award from CogX, one of the longest-running and best-regarded AI-focused event organisations in the business world, would be an honour. But it is particularly gratifying to win in this category, because First Principles Transparency and explainability are fundamental to our approach to AI.

AI has long been marketed as something too complex for humans to understand. Mind Foundry is changing this mindset and developing AI solutions for high-stakes applications that everyone can understand and engage with regardless of their technical knowledge.

Our Approach to Explainable AI

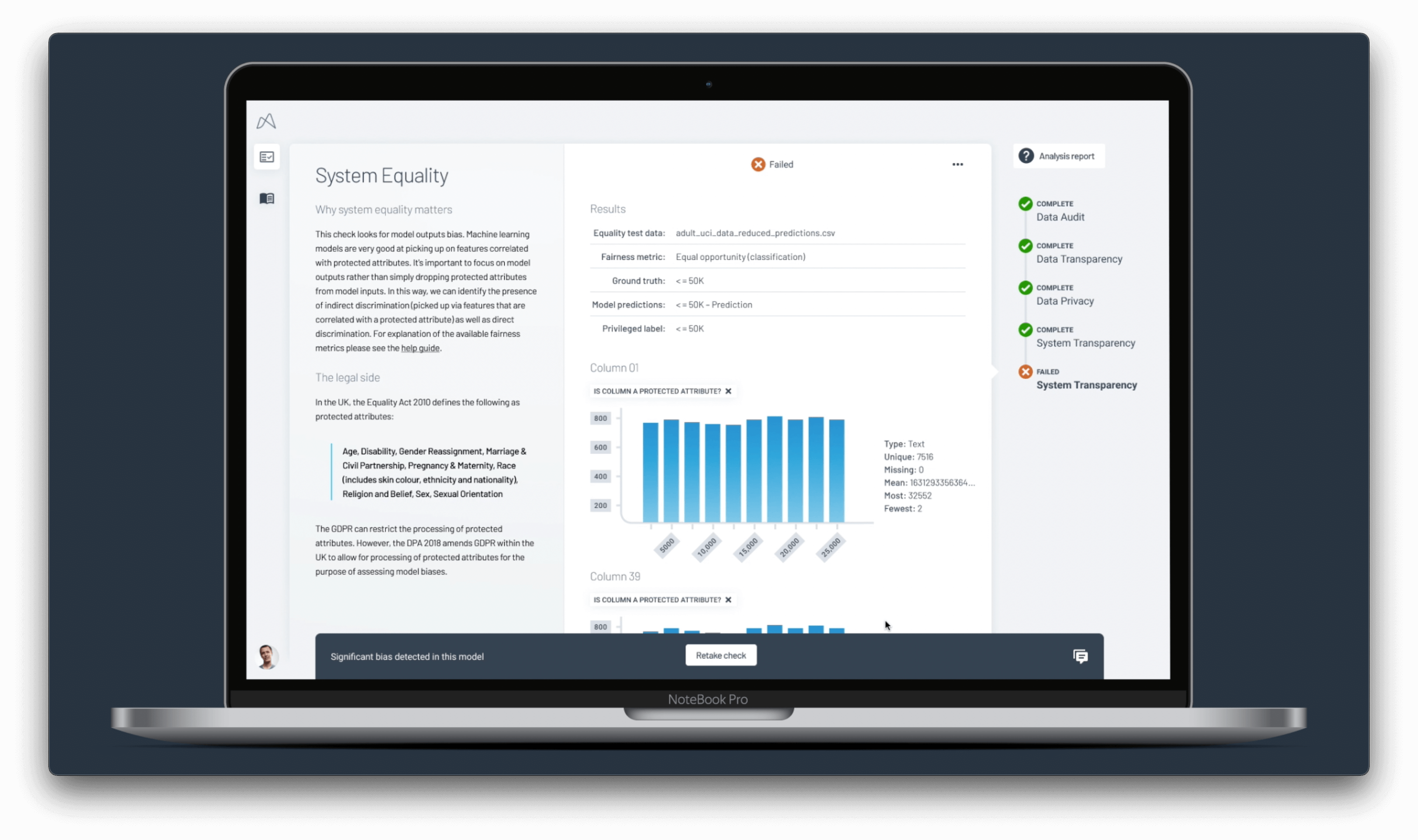

In high-stakes settings, it is vital that end-users, architects, and anyone collaborating with a system can understand how and why AI decisions are reached. Without this understanding, it is impossible to trust and rely on those decisions. Many approaches to explainability fall short, especially those where explanation capabilities are bolted on at the end as an afterthought. The result is often a solution that was never designed with explainability requirements in mind, and that is therefore lacking in both performance and transparency.

Mind Foundry treats explainability as a fundamental dimension of performance and integrates it into our models, solution designs, and implementations, intentionally avoiding architectures that do not lend themselves to in-depth understanding and exploration. This results in a holistic product optimised for what really matters.

In addition, we believe that explainability cannot be a one-size-fits-all solution. End users in different situations bring different contextual understanding and care about different things, so they require individualised explanations. While maintaining robust protections for privacy, our AI technology captures information about users, AI interactions, and decisions, which is then synthesised into explanations and provenance specifically tailored to our customers in a given context at the point of query.

Explainable AI in Government

One of the ways we have achieved this is through a partnership with the Scottish Government to build an explainable AI that aligns with their AI Strategy. Mind Foundry developed a framework, powered by its intelligent decision architecture, that allows technical and non-technical users to work with the system to understand how AI was used and how it affected results. Providing users with a system they can understand also allows true human-AI collaboration to flourish while retaining control, oversight and, most importantly, trust.

Learning about Explainable AI

An important question to ask when designing Explainable AI is, “Explainable to whom?” An explanation that makes sense to a data scientist might have minimal value to a non-technical stakeholder of the system. To bring all potential stakeholders into a meaningful conversation about how to use AI responsibly, we’ve created the Mind Foundry Academy, a learning platform designed to provide the skills needed to engage effectively with AI in the real world.

With the Mind Foundry Academy’s industry-specific courses, users gain the practical knowledge to become and remain effective collaborators with AI. Material is tailored to suit those working in government, insurance, media, communications, energy, and non-profits, and also provides a broader, sector-agnostic foundation in Machine Learning (ML). Each course includes guest lectures and public speakers, live workshops, dedicated tutors, a forum for additional support and a certificate, with credentials, upon completion.

The best AI systems are holistic and involve feedback, inputs, and validation from numerous stakeholders. To that end, our courses work best when offered to entire departments, encouraging collaboration between colleagues to jump-start a department-wide digital transformation and many inter-departmental conversations.

In a continuously evolving world, we bring together the world’s best scientists, engineers, design-thinkers and more to tackle the most challenging problems across numerous industries in an explainable and responsible way.

Enjoyed this blog? Check out 'Explaining AI Explainability'.
