AI-enabled Acoustic Intelligence for Anti-Submarine Warfare

From detecting hidden threats to defending critical underwater infrastructure, Anti-Submarine Warfare (ASW) is a cornerstone of national security. AI...

Why do we expect more from machines than we do from each other? We lean on machines for so much, yet when it comes to judging their performance, we seem to have a double standard. A human can fail, time and time again, and still be forgiven. But it’s not enough for a machine to consistently outperform a human - it has to be nearly flawless. Why is this? And is this the right approach?

How did we get here?

Some three hundred thousand years of experience as Homo sapiens has given us a series of heuristics and assumptions driven by the genetically ingrained need to survive and reproduce. The past offers us the certainty of outcome: we think we have a good idea about what happened, and we have learned through trial and error what does and doesn’t work. Our inherited experience, mostly passed on through word of mouth, has ingrained in us a "gut feel" idea of acceptable levels of risk and the parameters of human performance. The present, though slightly less comforting, offers both a reference window into the past and its certainty, as well as a glimpse into the future and potential uncertainty. It is this uncertainty that we struggle with: a lack of control over outcomes.

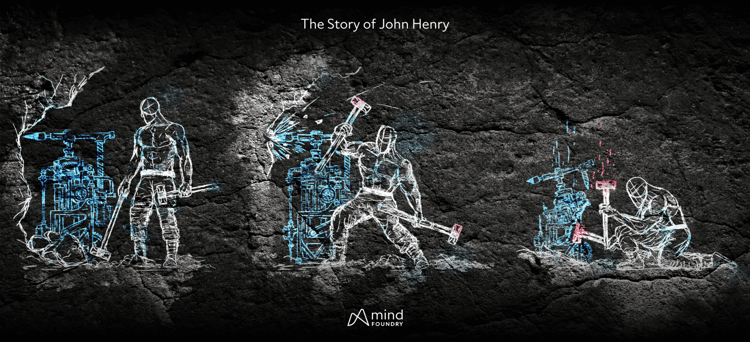

History is littered with examples of technological developments appearing to impinge on what it is to be human and what humans are good at. Take the early 1870s and the legend of John Henry, who outperformed the novel steam drill but died in the attempt to show the superiority of humans over machines. Or take the early days of keyword searching, when I set up an experiment using publicly available information on the internet to prove to decision-makers that web scrapers could identify an event far more rapidly and accurately than any human or collective of humans. In John Henry’s case, it was an attempt to maintain human dominance and protect jobs. In my case, it was an attempt to illustrate why the dull, repetitive task of trawling through near-infinite data sets in real time was something that could (and should) be done more effectively by machines than by humans.

The paradox is that, although the widespread adoption of technology is what has made us so successful as a species, that adoption is nevertheless fraught with obstacles, both real and imagined. In some cases, we are quick to adopt technology-enabled shortcuts where there appear to be few immediate consequences and then repent or unpick them at our leisure. It’s no bad thing that we don’t trust machines without hesitation or that communities of users like to adopt technology early in its development in order to find the edge cases and weaknesses.

AI and machines operate at the heart of this struggle with uncertainty, forcing us to question how we retain control over things that can now perform traditionally human tasks markedly better than we can. Not just that, but how do we avoid fixating and over-indexing on negative yet unlikely outcomes at the expense of those that are more realistic?

Opportunity vs Risk

As humans, we tend to trust our own subjective understanding of risk, opportunity, and threat. The German psychologist Gerd Gigerenzer calculated that in the year following the attacks on September 11th, an additional 1,595 Americans died in road traffic accidents due to an upsurge in travel by road, six times more than the number that died in the aircraft. The Luddites responded to the emerging technology of mechanised knitting looms in the early 19th century by rioting and breaking into factories to destroy the new contraptions, fearful that their highly skilled profession would be undermined and that unemployment would follow. The understandable fear of loss trumped the potential for gain.

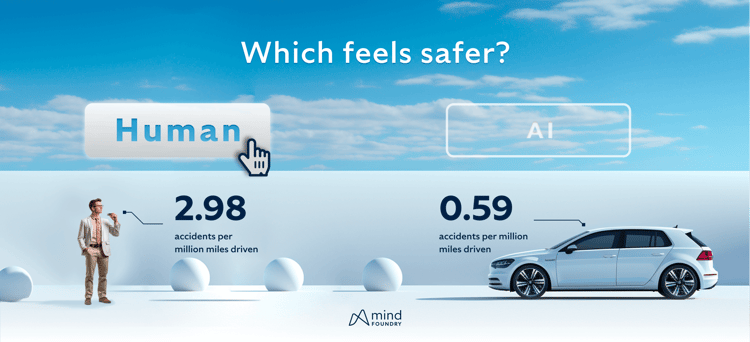

More recently, despite the evidence that, in controlled environments, driverless cars are several orders of magnitude safer than those driven by humans, fully autonomous vehicles are still many years away from widespread adoption and subject to far higher burdens of regulation and testing than the average driver. We are confronted with a mass of data that supports the relative safety of autonomous vehicles, and yet we still choose to focus on the worst possible outcomes. The implied conclusion is that it’s preferable to have any number of human-initiated deaths and injuries than one caused by a machine. Why is that? The answer is extremely complex, as one might expect, with many interwoven themes.

Determining What is “Trustworthy”

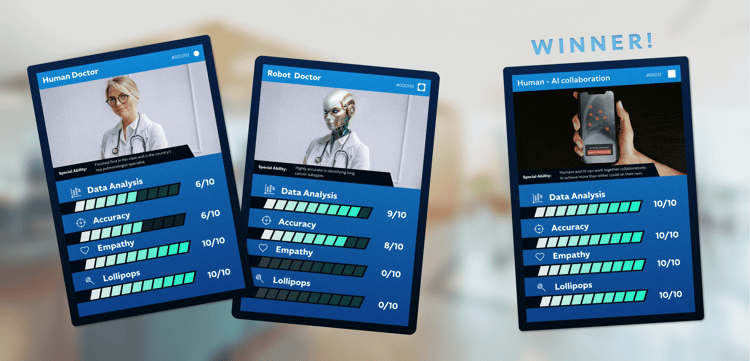

The first factor is that we are poor at determining how trustworthy a technology actually is. When confronted with a choice about whether or not to trust something, we resort to absolutes; yes or no - simple answers to complex questions. AI systems and machines lack essential human attributes such as empathy, emotional understanding, and contextual decision-making. This is both their super-power and super-fragility. While humans can incorporate emotions and complex contexts in their choices, AI algorithms rely instead on predefined rules and patterns.

An oft-cited example in the medical field is the use of AI-powered systems that can analyse medical data and assist in diagnosing diseases with greater accuracy than humans. However, the discussion then tends to overextend: soon the conversation switches to robot doctors, and then to whether these systems might lack the empathy and compassion that human doctors bring to patient interactions, potentially impacting the overall quality of care. What started as the automation of data analysis turns into a discussion about the power we want to invest in machines.

If an AI system fails in one sensor system in Defence, then it could fail in all of them as the flaw is scaled. However, as the flaw is scaled, so is the solution, meaning it only needs to be fixed once to solve a problem many times over. Humans, by contrast, are famously difficult to scale because of differences in capability, training, and experience. The radar operator who can identify a very weak low and slow signal amongst the noise reflecting back from a UAV might be subject to a number of variables. They could be extremely good or simply lucky, they could be at the start of their shift rather than the end, or they could have seen that particular signal type many times before.

Who do we hold accountable when machines fail?

Humans have a tendency to overestimate their own abilities. Just look at how “virtually every driver” believes they are above average, regardless of the circumstances or data. Part of the problem is that we haven’t really worked out how to measure human effectiveness at a wide range of tasks, so we have no benchmark for measuring how a machine performs in comparison. For example, suppose we build a model with an accuracy of 0.84, meaning its prediction is right 84% of the time. We are far more likely to spend time discussing how we get it closer to 1.0 than comparing its performance to that of humans in the same conditions. In many cases, the existing human performance might be significantly lower than 0.84, but we, as individuals, often fail to recognise our fallibility.
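To make the benchmarking point concrete, here is a minimal sketch of how a model and a human could be scored against the same labelled examples. Everything in it is illustrative: the function names, the 25 synthetic examples, and in particular the human score of 0.72 are assumptions invented for the example, not measured results. Only the 0.84 figure comes from the discussion above.

```python
from typing import Dict, Sequence


def accuracy(predictions: Sequence[int], labels: Sequence[int]) -> float:
    """Fraction of predictions that match the ground-truth labels."""
    if len(predictions) != len(labels):
        raise ValueError("predictions and labels must be the same length")
    if len(labels) == 0:
        raise ValueError("need at least one labelled example")
    correct = sum(p == y for p, y in zip(predictions, labels))
    return correct / len(labels)


def compare_to_human(
    model_preds: Sequence[int],
    human_preds: Sequence[int],
    labels: Sequence[int],
) -> Dict[str, float]:
    """Score model and human on the same examples and report both gaps."""
    model_acc = accuracy(model_preds, labels)
    human_acc = accuracy(human_preds, labels)
    return {
        "model": model_acc,
        "human": human_acc,
        "gap_to_human": model_acc - human_acc,  # the comparison we tend to skip
        "gap_to_perfect": 1.0 - model_acc,      # the comparison we tend to fixate on
    }


# Hypothetical data: 25 binary labels, purely for illustration.
labels = [i % 2 for i in range(25)]
# The model gets the first 4 examples wrong: 21 of 25 correct.
model_preds = [1 - y if i < 4 else y for i, y in enumerate(labels)]
# The (assumed) human operator gets the last 7 wrong: 18 of 25 correct.
human_preds = [1 - y if i >= 18 else y for i, y in enumerate(labels)]

result = compare_to_human(model_preds, human_preds, labels)
for name, value in result.items():
    print(f"{name}: {value:.2f}")
```

In this made-up scenario the model sits 0.16 short of perfect yet 0.12 ahead of the human baseline. Which of those two numbers dominates the conversation is the double standard in question.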

Furthermore, the absence of a moral compass in AI systems amplifies the need for human oversight and ethical considerations in ensuring responsible AI deployment. AI will struggle to navigate complex ethical dilemmas without a fundamental understanding of morality. Take self-driving cars as an example. While these vehicles could offer numerous benefits, such as improved road safety due to the removal of human driver error, they face challenging ethical decisions when confronted with unavoidable accidents. Who should the car prioritise: the passengers, pedestrians, or both? These ethical considerations highlight the need for human involvement in order to guide AI systems toward making decisions that align with our collective understanding of what is right and wrong.

When applied to a Defence context, the moral dilemma increases exponentially in scope. There is fractious debate about the differences between autonomy and automation and how the application of these technological principles to weapons systems will change the nature of warfare. We can forgive a human for a genuine mistake, but if a robot makes a targeting error, it is inevitably held to a different level of accountability. This asymmetry leaves users questioning the reliability and effectiveness of AI systems.

Building Trust Over Time

Broadly, human trust is built on shared and lived experiences. Relationships and trust develop and grow between people as each learns to better understand and communicate with the other, and this then scales across populations and generations. It isn’t necessarily that we all trust each other all of the time, but we do trust that the rule of law or societal norms will regulate and align where appropriate. We have no such certainty or confidence with machines, even when the data suggest otherwise.

Through repeated human interaction, assertions and ideas are examined, and internal world views and perspectives evolve and adjust. With ongoing collaboration, mutual assistance, and understanding, trust appears within a group. The ability to converse on an ongoing basis, update opinions as they are challenged, and adjust the way we treat individuals based on experience allows us to understand and feel understood. This builds trust over time. It is precisely because these interactions are continuous and adaptable rather than transactional and clinical that we can grow to understand and trust others.

Many AI deployments lack the ability to be challenged or explain their predictions and fail to update over time to reflect new knowledge of a problem or user context. The feeling that AI predictions cannot be explained then leaves users unable to make clear judgements on their validity and exposes those users to the risk of error because the checks and balances we have learnt for collaborating with humans simply cannot be applied to an impenetrable AI.

It is clear that for AI to be trusted and held to standards that are more attainable, or at least comparable to those we apply to humans, it must be able to interface with the methods humans have evolved to build trust and account for how we learn differently from machines. Explanations and justifications, updating predictions in the face of error, and learning the individual context of users are key components of this. When integrating AI in our most challenging problem spaces, it is only by considering human factors and end-user experience as well as model accuracy that we can truly build trust and realise the full potential of these new technologies.

