Alex Constantin: Updated on April 10, 2024

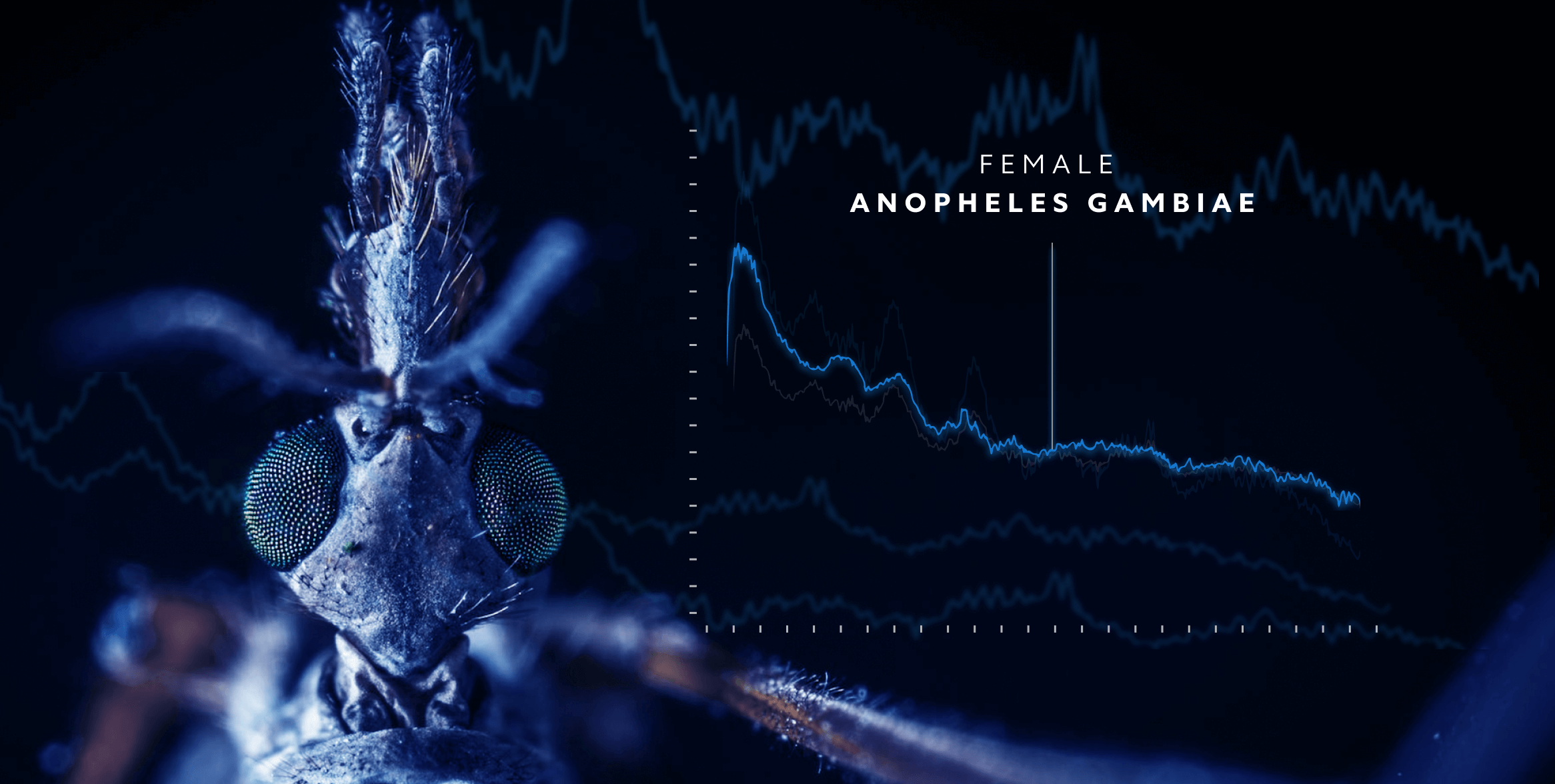

Helping the fight against malaria

Mind Foundry co-hosts HumBug 2022 as part of the ComparE challenge, bringing together leading researchers to help solve one of the world’s most important problems. The goal of the project is to use low-cost smartphones to detect the characteristic acoustic signatures of mosquito flight tones, helping to establish the presence of potential malaria vectors. The goal of the Mosquitoes Sub-Challenge at ComparE was to develop algorithms that detect mosquitoes in real-world acoustic field data.

Mind Foundry's University of Oxford connection

As a spinout of Oxford University, Mind Foundry retains a close connection to university research, allowing strong collaboration and shared resources. Not only does this provide a natural conduit for commercialising and scaling academic research, but it also provides Mind Foundry researchers with cutting-edge scientific and industrial challenges and access to world-class domain expertise.

The Problem

In 2020 alone, malaria accounted for 241 million cases of disease across more than 100 countries and an estimated 627,000 deaths. There are over 3,500 species of mosquito in the world, found on every continent except Antarctica. Mosquito surveys are used to establish the composition and abundance of vector species and, thus, the potential to transmit a pathogen. Traditional survey methods, such as human landing catches, which collect mosquitoes as they land on the exposed skin of a collector, can be time-consuming, expensive, and limited in the number of sites they can cover. These surveys can also expose collectors to disease. Consequently, an affordable automated survey method that detects, identifies and counts mosquitoes could generate unprecedented levels of high-quality occurrence and abundance data over spatial and temporal scales that are currently difficult to achieve.

The Goal

HumBug is a long-term research project, originally supported by Google and the ORCHID project, and currently funded by the Bill & Melinda Gates Foundation. The HumBug team has developed a novel mosquito survey system that detects and identifies different species of mosquitoes from the acoustic signature (sound) of their flight tones, captured on a smartphone app. This information can then enable targeted and effective malaria control through a better understanding of mosquito species abundance, without incurring any risk to those conducting the surveys.

Mind Foundry, in partnership with the University of Oxford team, has been particularly involved in the data analysis aspects of the project, from low-level audio signal processing to analysis of the relationships between mosquito wing morphology and beat frequency to mosquito detection algorithms. The goals of the HumBug project align well with the philosophy of Mind Foundry - forming robust, principled solutions to a high-stakes application with the potential to affect human lives at the scale of populations.
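To give a flavour of the low-level audio signal processing mentioned above, here is a minimal sketch that estimates the dominant frequency of a tone with a naive discrete Fourier transform. It is an illustration only, not the HumBug detection method: the 500 Hz test tone, the 8 kHz sample rate and the function names are arbitrary placeholders chosen for this example.

```python
import cmath
import math


def synth_tone(freq_hz, sample_rate, n_samples):
    """Generate a pure sine tone as a stand-in for a recorded flight tone."""
    return [math.sin(2 * math.pi * freq_hz * n / sample_rate) for n in range(n_samples)]


def dominant_frequency(samples, sample_rate):
    """Return the frequency (Hz) of the strongest DFT bin below Nyquist."""
    n = len(samples)
    best_bin, best_power = 0, 0.0
    for k in range(1, n // 2):  # skip the DC bin, stop before Nyquist
        coeff = sum(
            s * cmath.exp(-2j * math.pi * k * i / n) for i, s in enumerate(samples)
        )
        power = abs(coeff) ** 2
        if power > best_power:
            best_bin, best_power = k, power
    return best_bin * sample_rate / n


# Placeholder values: 400 samples at 8 kHz gives a 20 Hz bin width.
tone = synth_tone(freq_hz=500.0, sample_rate=8000, n_samples=400)
estimate = dominant_frequency(tone, sample_rate=8000)
print(f"Estimated dominant frequency: {estimate:.1f} Hz")
```

Real field recordings contain speech, rain and other insects, so a single spectral peak is nowhere near enough in practice. That is why the challenge asked for learned detection models.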

The Competition - ComparE 2022

This year, the HumBug project collaborated with ComparE 2022, developing the Mosquitoes Sub-Challenge. The goal of this challenge was to develop algorithms to detect mosquitoes within real-world acoustic field data. The data was recorded in demanding conditions that include rural acoustic ambience, the presence of speech, rain, other insects, and many more acoustic background phenomena.

The Engineering Challenge

This year’s HumBug challenge has been the most demanding so far, with the inclusion of large tracts of field data. The challenge data set has been laboriously curated from over six years of data collection and split into training, development, and test sets. All test data was recorded in people's homes, from various mosquito intervention solutions such as bednets.

Sensitive Data

As some of the challenge test data set was recorded in people’s homes, the data is sensitive. We avoid making the test data public by designing containers for the data, which allow submissions to be evaluated without exposing it. This preserves data privacy and removes the possibility of tuning models with knowledge of test data statistics.

The Problem to Solve

Both training and development data were made available to participants to develop models. Our problem is, therefore, one of finding a robust, secure environment that allows submission evaluation on the test data. We posed two major questions:

- How do participants upload their submission code?
- How do we build and run their models as seamlessly as possible?

Our solution was to build a Kaggle-like environment from scratch. But with the challenge kicking off in less than a week, there wasn't much time!

Our Solution

Two Machine Learning Research Software Engineers from Mind Foundry, Alex and Andreas, were tasked with working together with the team at Oxford University to develop a system that would allow participants to submit their code and get their submissions evaluated.

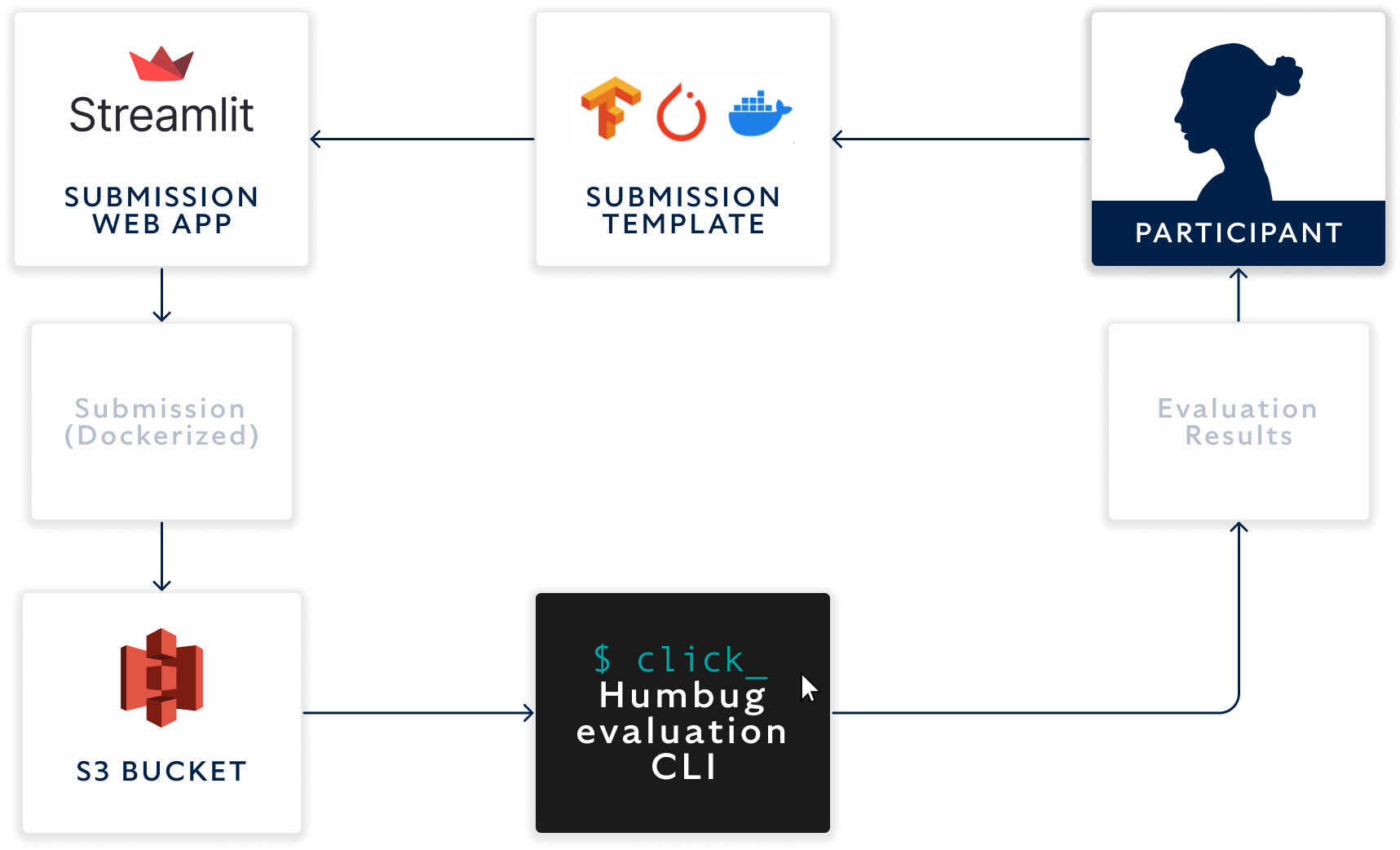

We considered many solutions before settling on a system composed of the following components (as shown in the figure above):

- A submission template
  - Serves as a starting point for participants, allowing them to easily plug in their own processing and modelling code
  - Provides versions for both PyTorch and TensorFlow, each with a baseline example model
  - Contains a batteries-included Docker setup, with working train and prediction scripts
- A submission web app
  - A very basic web application where each participant can upload up to 5 submission archives
  - Authentication is performed via a password provided upon registration for the challenge
- The HumBug evaluation CLI (known internally as humcli)
  - A command line interface that automates all tasks involving the evaluation of challenge submissions:
    - Listing the submissions of all participants
    - Downloading, building and running inference on submissions
    - Saving the outputs and tracking which submissions have been evaluated
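The two rules described for the submission web app (password authentication and a limit of five archives per participant) can be sketched in a few lines. This is a hypothetical illustration, not the code of the actual web app: the `SubmissionStore` class, its in-memory storage and the choice of PBKDF2 for password hashing are all assumptions made for this example.

```python
import hashlib
import hmac
import os

MAX_SUBMISSIONS = 5  # upload limit stated in the post


def hash_password(password, salt):
    """Derive a salted hash so the plain password is never stored."""
    return hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, 100_000)


class SubmissionStore:
    """In-memory stand-in for the web app's participant records (hypothetical)."""

    def __init__(self):
        self._credentials = {}  # team -> (salt, password hash)
        self._uploads = {}      # team -> list of archive names

    def register(self, team, password):
        salt = os.urandom(16)
        self._credentials[team] = (salt, hash_password(password, salt))
        self._uploads[team] = []

    def authenticate(self, team, password):
        if team not in self._credentials:
            return False
        salt, expected = self._credentials[team]
        # Constant-time comparison avoids leaking how many bytes matched.
        return hmac.compare_digest(hash_password(password, salt), expected)

    def upload(self, team, password, archive_name):
        """Record an upload and return how many archives the team now has."""
        if not self.authenticate(team, password):
            raise PermissionError("invalid team or password")
        if len(self._uploads[team]) >= MAX_SUBMISSIONS:
            raise ValueError(f"limit of {MAX_SUBMISSIONS} submissions reached")
        self._uploads[team].append(archive_name)
        return len(self._uploads[team])
```

A sixth call to `upload` for the same team raises `ValueError`, and a wrong password raises `PermissionError` before the limit is even checked.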

We worked to ensure that all components work seamlessly together to automate the submission and evaluation process as much as possible.
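The bookkeeping side of an evaluation CLI (knowing which submissions have already been evaluated) can be sketched as below. This is not humcli itself: the JSON state file, the function names and the `run_submission` callback are assumptions made for illustration, with the callback standing in for the download, build and inference steps.

```python
import json
import tempfile
from pathlib import Path


def load_state(state_file):
    """Read the set of already-evaluated submission IDs (empty if no file yet)."""
    path = Path(state_file)
    if not path.exists():
        return set()
    return set(json.loads(path.read_text()))


def save_state(state_file, evaluated):
    """Persist evaluated IDs as a sorted JSON list."""
    Path(state_file).write_text(json.dumps(sorted(evaluated)))


def pending(all_submissions, evaluated):
    """Return submissions that have not been evaluated yet, in listing order."""
    return [s for s in all_submissions if s not in evaluated]


def evaluate_all(all_submissions, state_file, run_submission):
    """Run every pending submission once and record it as evaluated."""
    evaluated = load_state(state_file)
    results = {}
    for sub in pending(all_submissions, evaluated):
        results[sub] = run_submission(sub)
        evaluated.add(sub)
        save_state(state_file, evaluated)  # save after each run so a crash loses nothing
    return results


# Usage with a fake runner: the second call only picks up the new submission.
with tempfile.TemporaryDirectory() as tmp:
    state = Path(tmp) / "evaluated.json"
    fake_run = lambda sub: f"scored {sub}"
    first = evaluate_all(["team-a/1", "team-b/1"], state, fake_run)
    second = evaluate_all(["team-a/1", "team-b/1", "team-a/2"], state, fake_run)
```

Saving the state after every submission, rather than once at the end, means a failed build or a crashed instance does not cause earlier submissions to be run again.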

What We Learned

Manual Labour: Even though most evaluation steps have been automated, some parts still require manual intervention. Examples include triggering the evaluation and communicating potential errors and results back to the team who created the submission.

Expense: Running and managing virtual machines with GPUs that can run these models is expensive. We’ve been using g4dn.xlarge instances, each equipped with an NVIDIA T4 GPU (16 GB of VRAM).

Preventing test information leakage: While we knew we were dealing with world-class teams submitting their work, we did take sensible precautions to prevent testing environment information leakage, like restricting network access and file system access within Docker.

Good scientists are also good engineers: To our joy, participants experienced minimal to no issues familiarising themselves with the Docker-based submission.
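The precautions against test information leakage mentioned above (restricting network and file system access within Docker) map onto standard `docker run` options. The sketch below only assembles such a command as a list of arguments. The image name, the host paths and the `/data` and `/output` mount points are hypothetical, and this is not the exact invocation used for the challenge.

```python
import shlex


def build_eval_command(image, test_data_dir, output_dir, use_gpu=True):
    """Assemble a `docker run` invocation that isolates a submission."""
    cmd = [
        "docker", "run", "--rm",
        "--network", "none",   # no network: nothing can be sent out of the container
        "--read-only",         # container root file system is read-only
        "--tmpfs", "/tmp",     # scratch space that vanishes with the container
        "-v", f"{test_data_dir}:/data:ro",   # test audio mounted read-only
        "-v", f"{output_dir}:/output",       # the only writable host path
    ]
    if use_gpu:
        cmd += ["--gpus", "all"]  # expose the host GPUs to the container
    cmd.append(image)
    return cmd


# Hypothetical image name and paths, for illustration only.
command = build_eval_command("submission-team-a", "/srv/test-data", "/srv/out/team-a")
printable = shlex.join(command)
print(printable)
```

Building the command as a list, rather than a single string, lets it be passed straight to `subprocess.run` without shell quoting problems.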

Hosting ML Competitions is Tough

At the beginning of this journey, we considered the main problems to be ones of data privacy. We very quickly came to realise that an entire MLOps infrastructure was required. Luckily, the team at Mind Foundry are experts in the field and are always up for a high-stakes challenge. Stay tuned for the next time we host a challenge: we will certainly turn the lessons learned from this one into improvements for the next!

Alex and Andreas would like to thank Ivan Kiskin, of the People-Centred AI institute at the University of Surrey, and Mind Foundry co-founder Professor Steve Roberts, University of Oxford, for their work in coordinating the collaboration with us. Working with collaborators who are helpful, responsive and clear in their requirements makes all the difference.

Additional Reading

Interview with Davide Zilli: learn more about some of the initial motivations and early challenges of applying Machine Learning to high-stakes applications, like the fight against malaria, in this interview with Mind Foundry's VP of Applied Machine Learning, Dr. Davide Zilli.

Mosquito Case Study: learn more about the problem, the solution, and the initial results of this high-stakes application of AI. Also includes links to four pieces of research Mind Foundry has published in this space.
