AI-enabled Acoustic Intelligence for Anti-Submarine Warfare

From detecting hidden threats to defending critical underwater infrastructure, Anti-Submarine Warfare (ASW) is a cornerstone of national security. AI...

With its unparalleled ability to extract insight from burdensome volumes of raw data, AI is more than an opportunity to innovate. Nevertheless, this doesn’t make it the answer to every problem involving data. Even when AI is applied to the right problems, there is another equally critical consideration when attempting to realise its impact: ensuring that models which perform well in a scientific or test context can be operationalised to deliver timely, positive impact in the real world.

Going From The Lab to The Real World

Corporate investment in AI has skyrocketed in recent years, growing from $12.75 billion in 2015 to $91.9 billion in 2022. Despite this, only 54% of AI projects make it from the pilot stage to deployment. Instead of being operationalised, almost half of all projects remain archived in experimentation limbo. In especially complex and challenging environments like those in defence and national security, the proportion of failed AI projects is likely even higher. The root of this is that many organisations mistakenly view AI as the goal. The misplaced priority of “doing more AI” without an accompanying deployment strategy causes an ever-widening chasm between what works theoretically and what can deliver impact in the real world.

We see this manifesting in several ways. AI models may be built to solve a theoretical problem, such as identifying a signal and matching it to a catalogue of known examples. In the real world, however, they are expected to solve the adjacent but fundamentally different problem of identifying signals they’ve never seen before. AI could also be expected to detect a signal of interest in the maritime domain, for example, but in practice, as we move from a demonstration problem to a real problem, there are challenges with data governance, and the required data cannot actually be sent to where the model is hosted. Ultimately, a model that performs adequately in lab testing ends up being totally unfit for purpose when addressing the problem it was(n’t) built to solve.
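The gap between matching a signal to a catalogue and handling a signal that has never been seen before can be illustrated with a minimal Python sketch. Everything here is an assumption made for illustration: the feature vectors, the labels `vessel_a` and `vessel_b`, the use of cosine similarity, and the `min_similarity` threshold are hypothetical and do not describe any particular deployed system.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def match_closed_set(signal, catalogue):
    """Lab-style matcher: always returns the closest catalogue label."""
    return max(catalogue, key=lambda label: cosine_similarity(signal, catalogue[label]))


def match_open_set(signal, catalogue, min_similarity=0.9):
    """Return the best label, or None when nothing is similar enough.

    The 0.9 threshold is an illustrative assumption, not a recommendation.
    """
    best = match_closed_set(signal, catalogue)
    if cosine_similarity(signal, catalogue[best]) < min_similarity:
        return None  # treat as a previously unseen signal
    return best


# Hypothetical catalogue of known signatures (toy three-dimensional features).
catalogue = {
    "vessel_a": [1.0, 0.0, 0.0],
    "vessel_b": [0.0, 1.0, 0.0],
}
known = [0.95, 0.05, 0.0]  # close to vessel_a
novel = [0.0, 0.0, 1.0]    # unlike anything in the catalogue

print(match_closed_set(novel, catalogue))  # confidently wrong: picks a known label
print(match_open_set(novel, catalogue))    # None: flagged as unknown
```

The closed-set matcher is forced to pick a known label even for the novel signal, which is the lab-versus-real-world mismatch described above. The open-set variant at least has a way to say "I have not seen this before", although choosing that threshold in practice is itself a deployment question.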

The Fallacy of Operationalisation as an Afterthought

There are significant practical challenges that come with using AI in complex problem spaces, and if these challenges are not considered at design time, then we end up in a situation where attempts are made to retrofit a highly complex model with makeshift, post-hoc approaches to cover the deployment gaps. Frequent attempts to mitigate LLM hallucination and misuse with increasingly complex guardrails are a prime example of this in today's world.

A system should not be designed for hypothetical scientific metrics, with deployment considerations coming later. Instead, the better approach is to design for deployment. Start by considering all relevant requirements the system must cater to and continually reassess these throughout the development and deployment cycle. Performance then becomes a measure not just of accuracy or precision but also of deployment infrastructure, explainability, user experience, transparency, stability, adaptability, and many other factors that are equally significant in their own right.

These considerations cannot be “set and forget” when scoping a project. The complexity of AI means that it is sometimes nearly impossible to understand future requirements without extensive discovery. Project goals and the (multifaceted) performance standards to which AI systems will be held must be reviewed continuously by individuals with relevant expertise.

[Figure: The Fallacy of Operationalisation as an Afterthought]

When setting standards for performance in a lab setting, there is often insufficient consideration for how those standards will translate to the system’s use in a real-world situation. Once a system passes its “experimental” phase, it is commonly handed off to a totally separate operations team. This team then has to deal with all the baked-in choices made in its scientific design, accurate or otherwise. This subsequently introduces significant and unnecessary roadblocks to deployment that could be avoided with more joined-up thinking.

In all high-stakes applications, it’s paramount that decision-makers retain accountability and can explain how and why they took certain courses of action. This includes the AI that they might use to inform these decisions. If AI is built without consideration of explainability and governance requirements, it can have devastating consequences. It is a fundamental necessity to consider who will use the system. What level of assurance will they need that the AI is functioning correctly? How will they sign off on decisions, and what kind of explanation do they need? The bigger the dataset and the more complex the model, the less likely it is that transparency and explainability will emerge by default. These characteristics must be priorities, not afterthoughts.

The Pathway to Operationalising AI

A car manufacturer designing a luxury saloon would set a particular performance standard in testing for aerodynamics, speed, power, comfort, and aesthetics, prioritising the latter two over the others. All considerations are incorporated into the design phase because, for example, the size of the engine will impact the shape of the car and its subsequent performance in the wind tunnel. If building a race car, their priorities would doubtless be different. Either way, the system is designed with the end user and their perception of performance in mind, and the design process is optimised accordingly.

Just because AI is an advanced science doesn’t mean it should be treated differently from any other operational technology. In fact, treating it like a science project is the root of the problem. Instead, the correct approach is to dedicate a single evolving team that takes a capability from ideation to operationalisation, avoiding handoffs. To understand the problem, this team needs to understand the data, the technical feasibility, the integration environment, the user context and need, and the governance and assurance process. This does, of course, result in a highly challenging problem to solve, but the alternative is failing to consider critical elements at the earliest possible stage, which will result in misplaced assumptions being baked into the system.

The team working on the solution must have the required end-to-end experience to assure rapid success or failure and subsequent cost-effective iteration. The most effective way to accelerate designed systems to deployment is to consider them in product terms with a user-centric, design-thinking perspective. Product designers and owners will consider deployment from the end user and ideal experience backwards. Scientists will consider deployment from the science and technical feasibility forward. Experts from the end user group, systems integrators, and key decision makers must also be at the table to ensure that complex interconnected dependencies are considered when making important choices throughout a development cycle.

By tackling the problems from the perspectives of science, users, engineers and other stakeholders, an operationally realistic and impactful solution is far more likely to be delivered. Teams need to favour agility over deep and unwavering certainty and remember they are building a solution to help solve problems that are not yet well understood. Designs must be refined as new information is discovered and complications arise. Preparing for this eventuality from the start enables the delivery of a system fit for purpose on completion.

The Power of a Multi-Organisation Partnership

In challenging problem spaces, the signal-to-output workflow is often complex. Whether that is a sound or image detected by a sensor, processed digitally and presented to a human, or data created by a human and presented back to other humans, there are multiple steps in the process. No single hardware manufacturer, end customer, consultancy, data engineer, or, indeed, AI company alone will have all the answers.

The realisation of this fact highlights the power and value of deep partnerships. With a mutual understanding of relative skills and awareness of strengths and limitations, these partnerships grow over time as organisations work together and learn how to do this better. When incorporated into the design and development processes, the combination of diverse perspectives gives a total far greater than the sum of their parts and massively increases the likelihood that the designed system will make it to deployment.

Creating solutions that maximise value and impact for the organisations they serve must start at the earliest stages of problem and system design. By beginning with the end goal in mind, we can design for what truly matters and avoid the rabbit holes of innovation-led, overly science-focused, or operationally unviable ideas. Once deployed, the challenge of maintaining a system’s relevance and aptitude for ongoing use is a continuous one - models must be monitored and kept up to date. Most machine learning models degrade over time as the world changes and new data is introduced, and this needs to be measured carefully to ensure remedial action can be taken at the precise moment it is needed.
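One way to make "measured carefully" concrete is to compare the data a model sees in deployment against the data it was built on. The sketch below uses the Population Stability Index (PSI), a common drift measure. The bin edges, the sample values, and the 0.2 review threshold (a widely quoted rule of thumb) are all illustrative assumptions, and the function names are hypothetical.

```python
import math


def bin_fractions(values, edges):
    """Fraction of values in each bin; out-of-range values go to the end bins."""
    counts = [0] * (len(edges) - 1)
    for v in values:
        index = 0
        # Walk right until the value falls below the next edge or we hit the last bin.
        while index < len(counts) - 1 and v >= edges[index + 1]:
            index += 1
        counts[index] += 1
    return [c / len(values) for c in counts]


def population_stability_index(reference, live, edges, eps=1e-6):
    """PSI between a reference sample and a live sample of one feature."""
    ref = bin_fractions(reference, edges)
    cur = bin_fractions(live, edges)
    psi = 0.0
    for r, c in zip(ref, cur):
        r, c = max(r, eps), max(c, eps)  # avoid log(0) for empty bins
        psi += (c - r) * math.log(c / r)
    return psi


def needs_review(reference, live, edges, threshold=0.2):
    """Flag the model for review when drift exceeds the assumed threshold."""
    return population_stability_index(reference, live, edges) > threshold


# Hypothetical values of a single model input at build time and in deployment.
edges = [0.0, 0.25, 0.5, 0.75, 1.0]
reference = [0.1, 0.2, 0.3, 0.4, 0.6, 0.7, 0.8, 0.9]
shifted = [0.8, 0.85, 0.9, 0.95, 0.9, 0.8, 0.7, 0.6]

print(needs_review(reference, reference, edges))  # False: no drift
print(needs_review(reference, shifted, edges))    # True: inputs have shifted
```

A check like this only signals that the inputs have changed. Deciding what remedial action to take, such as retraining, recalibration, or withdrawal, still depends on the user context and governance process discussed earlier.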

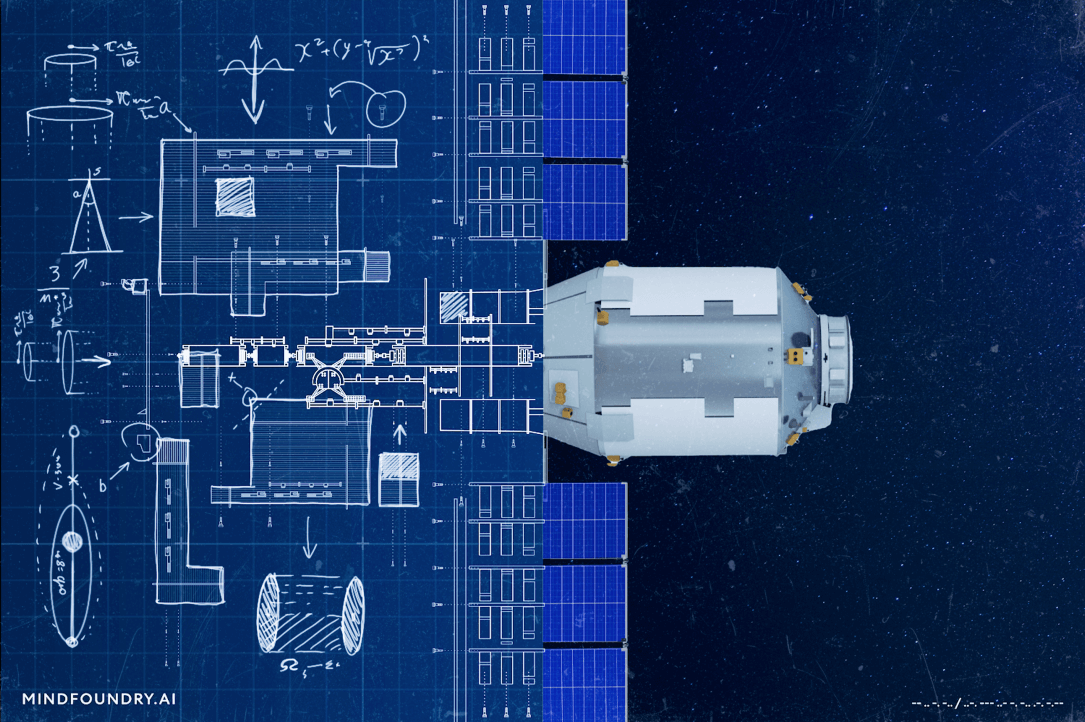

The complicated nature of AI and the ever-changing problem spaces in which it is used mean there is no precise blueprint for success. Approaching AI adoption correctly, with the end goal in mind and with the involvement of partners, is a critical first step toward building something truly impactful. With more of this operationally focused attitude, the scope of problems that AI can solve will only increase, and the benefits will be felt across society.

Enjoyed this blog? Check out Why AI Isn't the Answer to Every Data Problem.
