AI, Insurance, and the UN SDGs: Building a Sustainable Future

Mind Foundry has been working alongside Aioi Nissay Dowa Insurance and the Aioi R&D Lab - Oxford to create AI-powered insurance solutions whose...

Mind Foundry

Dec 7, 2023 1:09:44 PM

As an industry driven by data and complex algorithms, the insurance sector stands at a crossroads where transparency and explainability in pricing models are becoming more than just best practices: they are becoming imperatives. As the tools and practices for creating Explainable Artificial Intelligence (XAI) become more accessible and robust, insurers will be able to use them to deploy trustworthy, Responsible AI at scale, staying ahead of regulation and gaining a competitive advantage.

What Is Explainability?

Explainability in the field of AI refers to methods and techniques that make the results and workings of AI systems understandable to humans. Unlike more technical terms such as transparency and interpretability, explainability is concerned with human understanding. As Oxford Professor Steve Roberts says in a previous blog on Explainability, “It’s the ‘aha!’ moment that comes when the real meaning of something is grasped at an intuitive level. It’s being able to transfer all that data into something which you can almost feel in your stomach as you get your head around it all and eventually end on an insight like ‘Oh, of course… That makes sense!’”

XAI is crucial in contexts like insurance pricing, where understanding how AI models make decisions is necessary to assess whether they are fair, whether they comply with regulations, and whether customers can trust them. However, since people differ in background and in capacity for understanding, what constitutes explainability varies significantly with one's technical expertise and position within an organisation:

For Technical Professionals (e.g. Data Scientists, AI Researchers): They view XAI as involving specific algorithms and techniques that make the complex decision-making processes of AI models transparent. This might include methods to visualise the feature importance in predictive models or techniques like SHAP (SHapley Additive exPlanations) that explain the output of machine learning models.

For Actuarial Professionals: Pricing models must deliver profit to the insurer over a long lifetime of economic and market uncertainty. Layering in complex AI models can add further uncertainty if their behaviour cannot be explained to the teams looking after the insurer's balance sheet and P&L.

For Business Professionals (e.g. Executives, Managers): They may interpret XAI in a broader, more application-oriented way. For them, XAI is about ensuring that AI-based pricing models are transparent, fair, and comply with industry regulations. Their focus is more on the governance and policy implications of XAI – how it impacts customer relations, meets legal standards, and integrates with business strategies.

For Regulators and Compliance Officers: Their interest in XAI lies in its ability to demonstrate that AI-driven decisions, such as those determining insurance premiums, are made fairly and without bias. For them, XAI is a tool to ensure accountability and regulatory compliance in AI implementations.

For End Users (e.g. Customers): They generally seek simplicity and clarity in XAI. To them, it means receiving clear, understandable explanations for why their insurance premiums are priced a certain way, ensuring transparency and building trust.

Often, the “aha!” moment of explainability for customers has little to do with AI and a lot more to do with macroeconomics. When they ask why their renewal price went up this time, they don’t want you to hand over several hard drives of impossible-to-read data: they’d rather you tell them that it’s because inflation has caused the cost of car parts to go up throughout society.

In summary, XAI bridges the complex inner workings of AI models and the need for clarity and trust among users and stakeholders. The depth and technicality of its understanding vary depending on one's role and expertise, ranging from highly technical algorithmic explanations to broader concerns about macroeconomics, fairness, transparency, and regulatory compliance.
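For the technical end of that range, the idea behind SHAP can be illustrated with a minimal sketch. It computes exact Shapley values by brute force for a toy pricing function; it does not use the `shap` library, and the `premium` function, its factors, and the baseline driver are all hypothetical values chosen for illustration, not a real pricing model.

```python
from itertools import combinations
from math import factorial

# Hypothetical toy pricing model (an assumption for illustration only).
def premium(driver):
    price = 300.0
    if driver["age"] < 25:
        price += 200.0
    price += 50.0 * driver["claims"]
    # Interaction: young drivers with powerful cars pay an extra loading.
    if driver["age"] < 25 and driver["engine_kw"] > 100:
        price += 100.0
    return price

def shapley_values(model, instance, baseline):
    """Exact Shapley values; features outside a coalition take their baseline value."""
    features = list(instance)
    n = len(features)
    values = {}
    for f in features:
        others = [g for g in features if g != f]
        total = 0.0
        for k in range(n):
            for subset in combinations(others, k):
                # Standard Shapley weight for a coalition of size k.
                weight = factorial(k) * factorial(n - k - 1) / factorial(n)
                without_f = {
                    g: (instance[g] if g in subset else baseline[g])
                    for g in features
                }
                with_f = dict(without_f)
                with_f[f] = instance[f]
                total += weight * (model(with_f) - model(without_f))
        values[f] = total
    return values

# Hypothetical reference driver and the driver whose price we want to explain.
baseline = {"age": 40, "claims": 0, "engine_kw": 80}
driver = {"age": 22, "claims": 1, "engine_kw": 120}

contributions = shapley_values(premium, driver, baseline)
for name, amount in contributions.items():
    print(f"{name}: {amount:+.2f}")
```

In this toy case the contributions add up to the gap between the driver's premium and the baseline premium, and the interaction loading is shared equally between age and engine power. Brute-force enumeration grows exponentially with the number of features, which is why practical tools rely on approximations or model-specific shortcuts.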

Why Are Explainability and Transparency Important in Insurance Pricing?

The first reason for prioritising explainability is regulatory compliance. Pricing managers have to understand their own pricing models in order to trust that they can use them and to know that they are complying with regulations. This is critical in an environment where regulatory bodies, such as the UK's Financial Conduct Authority (FCA), are increasingly vigilant, with new laws being introduced at a rate not seen for decades. One such example, the Consumer Duty, will have far-reaching implications for how insurers use AI in areas like insurance pricing.

The second reason is sustainable profitability. If the model combinations exceed a human team’s ability to understand the overall effect on profits, then the insurance enterprise is imperilled.

The third, equally important aspect is consumer duty. We, as a civilisation, are still at the beginning of our journey with AI, but as consumers learn more about it, they naturally want to know how it affects them personally. Insurers need to be able to explain this in terms that are meaningful and easy to understand. Insurers that prioritise explainability now will stay ahead of cultural shifts and consumer duty expectations.

Balancing Sophistication with Clarity

The evolution of pricing models, especially with the introduction of AI and Machine Learning, has made explaining them daunting yet essential. Traditional models, like generalised linear models, offered some level of transparency, but as insurers have moved to more sophisticated models like gradient-boosted models (GBM), the challenges have intensified. For example, training a pricing model involves many iterations and experiments. Being able to track the results of those experiments is crucial for data scientists, who will want to ask questions like, “What hyperparameters and features did I use? What was my F1 score for that run? Which loss function did I use?”
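One lightweight way to keep those questions answerable is to log every training run as a structured record. The sketch below is an assumption about how that might look using only the Python standard library; the `RunRecord` fields, the file name, and the metric values are illustrative placeholders rather than real results or any particular tool's format.

```python
import json
import os
import tempfile
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone

@dataclass
class RunRecord:
    """One training experiment: what was tried and how it scored."""
    run_id: str
    hyperparameters: dict
    features: list
    loss_function: str
    metrics: dict
    logged_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

def log_run(path, record):
    # Append one JSON object per line so the log is easy to diff and audit.
    with open(path, "a", encoding="utf-8") as fh:
        fh.write(json.dumps(asdict(record)) + "\n")

def load_runs(path):
    with open(path, encoding="utf-8") as fh:
        return [json.loads(line) for line in fh if line.strip()]

def best_run(runs, metric):
    # Return the run with the highest value of the chosen metric.
    return max(runs, key=lambda run: run["metrics"][metric])

# Placeholder values for illustration only.
log_path = os.path.join(tempfile.mkdtemp(), "runs.jsonl")
log_run(log_path, RunRecord(
    "run-001", {"learning_rate": 0.1, "max_depth": 3},
    ["age", "claims"], "poisson_deviance", {"f1": 0.71},
))
log_run(log_path, RunRecord(
    "run-002", {"learning_rate": 0.05, "max_depth": 5},
    ["age", "claims", "engine_kw"], "poisson_deviance", {"f1": 0.74},
))

best = best_run(load_runs(log_path), "f1")
print(best["run_id"], best["hyperparameters"], best["features"])
```

An append-only log like this answers "what did I use for that run?" directly. Dedicated experiment-tracking tools offer the same idea with richer tooling, but the record structure is the important part.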

Automating the Explanation Process

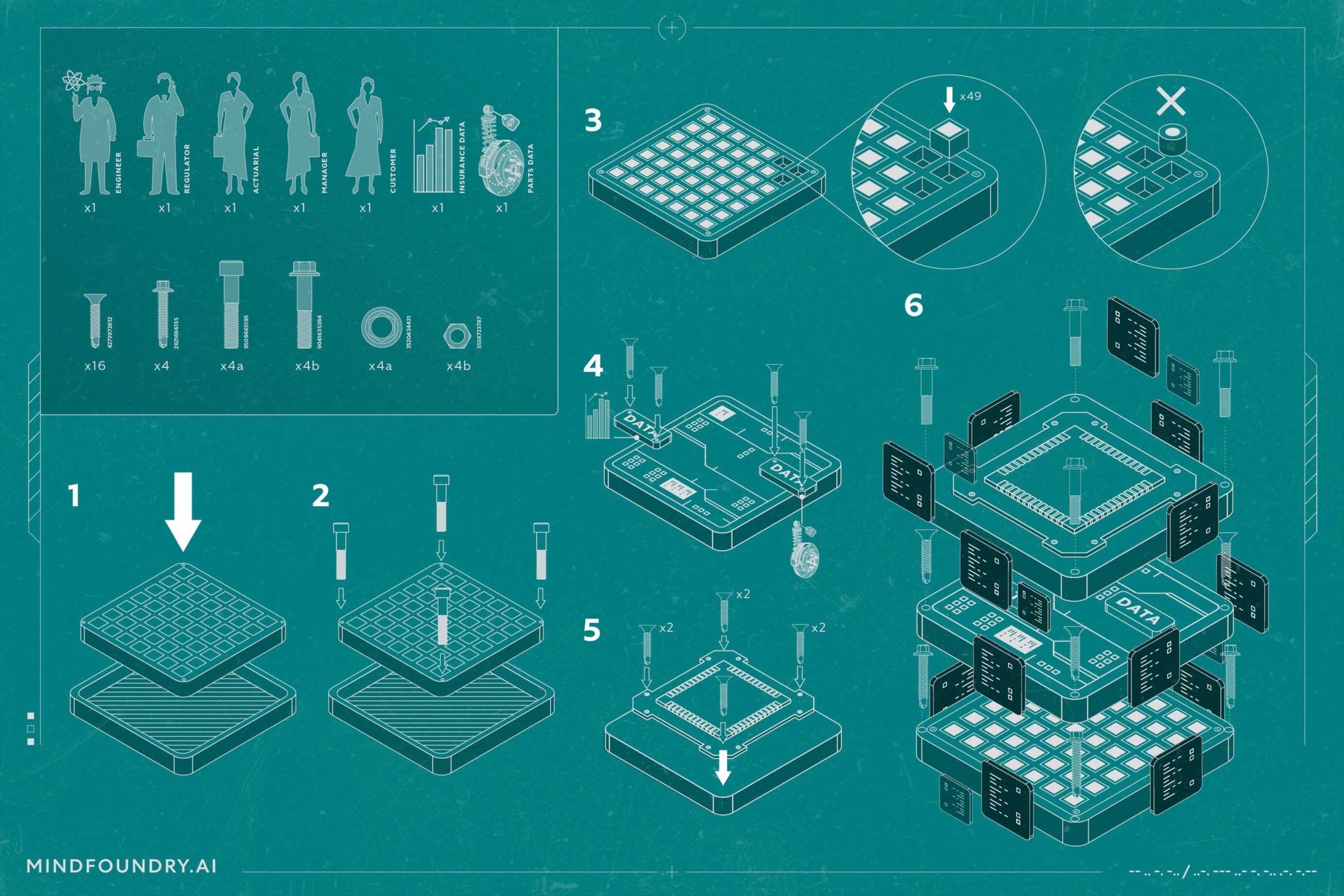

Increasingly sophisticated pricing models provide insurers with a competitive advantage, but providing XAI for these models is time-consuming, often taking close to 50% of a team’s time. As organisations adopt AI and scale the number of models in operation, automating the explanation process will become crucial to every insurer’s technology stack.

Though few providers today can meet the challenge of providing this type of automation, it is becoming vital in an era where large data science teams spend considerable time ensuring transparency and clarity in their models. By automating this process, insurers can enhance efficiency and provide better answers to consumers.
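As a rough illustration of what automating part of this process could involve, the sketch below turns per-factor price changes into a short customer-facing sentence. It is only an assumption about one possible approach: the `explain_renewal` function, the factor names, and the amounts are hypothetical, and the contributions would in practice come from whatever attribution method the insurer already uses.

```python
def explain_renewal(previous, current, contributions, top_n=2):
    """Summarise a renewal price change in plain language.

    `contributions` maps a human-readable factor name to the amount it
    added to (positive) or removed from (negative) the price.
    """
    change = current - previous
    if change == 0:
        return "Your renewal price has stayed the same."
    direction = "increased" if change > 0 else "decreased"
    # Keep only factors that pushed the price in the same direction
    # as the overall change, largest effect first.
    drivers = [
        (name, amount)
        for name, amount in contributions.items()
        if amount * change > 0
    ]
    drivers.sort(key=lambda item: abs(item[1]), reverse=True)
    summary = f"Your renewal price has {direction} by £{abs(change):.2f}"
    if not drivers:
        return summary + "."
    reasons = " and ".join(name for name, _ in drivers[:top_n])
    return f"{summary}, mainly because of {reasons}."

# Hypothetical figures for illustration only.
message = explain_renewal(
    previous=500.0,
    current=560.0,
    contributions={
        "higher car repair and parts costs": 45.0,
        "a claim in the last year": 25.0,
        "an extra year of no-claims discount": -10.0,
    },
)
print(message)
```

The design choice here is to lead with the largest factors that moved the price in the same direction as the overall change, which matches the kind of answer a customer asking "why did my renewal go up?" is looking for.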

We believe insurers will quickly see explainability as a competitive edge and key differentiator. The more transparent and automated an insurer’s processes become, the more time its teams can devote to developing new, effective models. Speed to market improves too, so new models deliver their competitive advantage sooner.

Balancing Transparency with Competitive Edge

The journey towards more explainable and transparent insurance pricing models is not just a regulatory necessity but also a step towards building greater trust with consumers and stakeholders. The path forward involves balancing sophistication with clarity and innovation with risk, automating the explanation process with AI-assisted explainability techniques, and leveraging AI responsibly. This paradigm shift is not merely about compliance but about forging a path that aligns with evolving consumer expectations and the ethos of fair value. As the industry continues to evolve, those prioritising explainability will likely find themselves at the forefront, not only in compliance but also in customer trust and market competitiveness.

At Mind Foundry, explainability has always been a fundamental part of our DNA. If you are ready to enhance your pricing with the power of AI explainability, take the next step by reading our 7 practical steps for AI governance in pricing, or connect with our experts. Let's explore the possibilities together and bring clarity and understanding to your pricing processes, creating a more seamless and valuable experience for your customers.

Enjoyed this blog? Check out our piece on "Innovations in Explainable AI"
