AI-enabled Acoustic Intelligence for Anti-Submarine Warfare

From detecting hidden threats to defending critical underwater infrastructure, Anti-Submarine Warfare (ASW) is a cornerstone of national security. AI...

5 min read

Mind Foundry


Updated on December 1, 2025

In the previous two blogs in the series, we addressed what makes machine learning models trustworthy and how machine learning models fail. This blog is focused on how machines learn and one particular approach called meta-learning, which enables machine learning models to improve over time such that they can generalise to perform related but distinct tasks. We will look at how humans learn compared to machine learning, what meta-learning is, and how meta-learning can be done responsibly.

How do humans learn compared to Machine Learning?

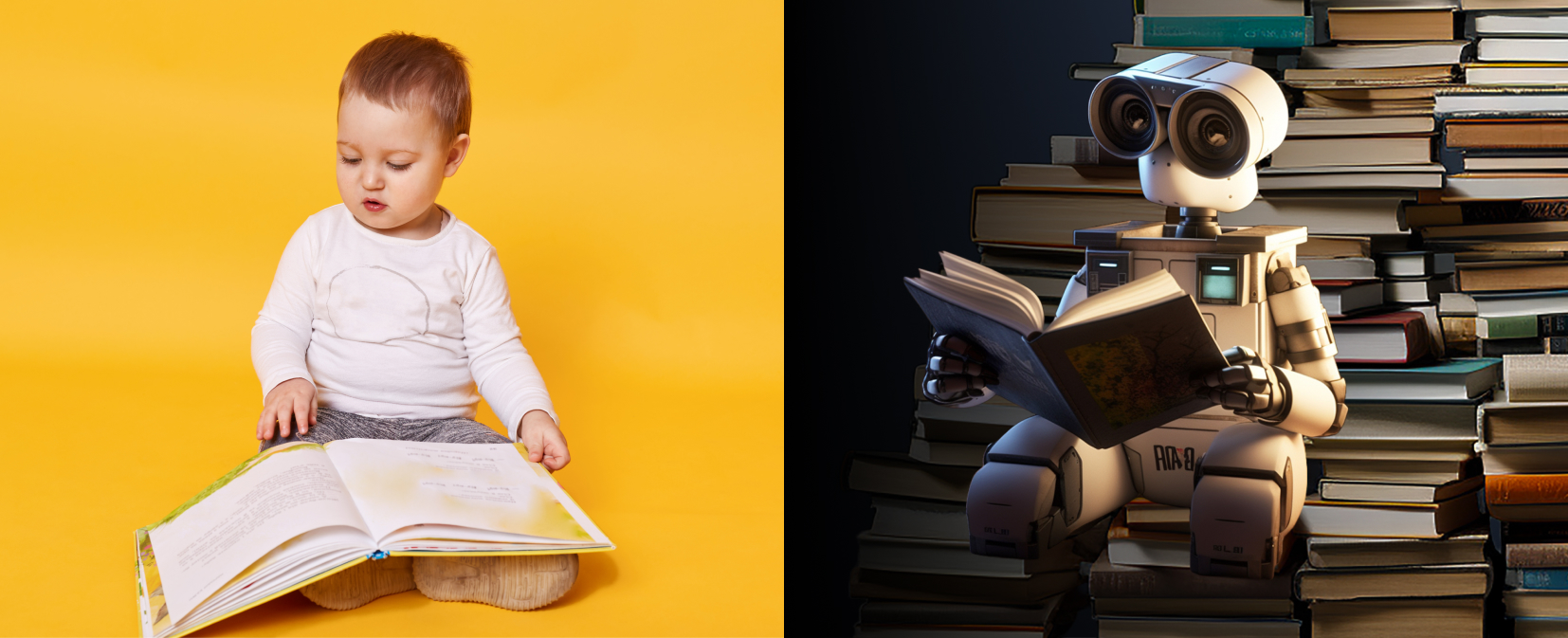

How humans learn is a critical question. It is directly relevant here, as artificial neural networks are both named after and influenced by the human brain. According to neuroscience professor Stanislas Dehaene, babies are born with in-built knowledge, hardwired by millions of years of evolution. This in-built knowledge allows newborn babies to understand the basic laws of gravity and to recognise the difference between humans and inanimate objects. While babies have a strong instinct for language, they are not born knowing a full language and, instead, must learn it. Why? The advantage of learning is that it allows better adaptability to a specific environment. By needing to learn a language, the baby can better mimic specific sounds, accents, and colloquialisms. Dehaene argues that human learning requires building an internal model of the external world, minimising errors, and exploring multiple possibilities with optimised rewards to reinforce learning. Even small species, such as ants and worms, exhibit these learning behaviours (to various degrees) and are born without complete knowledge.

The advantage of human learning over machine learning is that humans are more data-efficient. It has been estimated that the average French child hears about 500-1000 hours (roughly 20-42 days) of speech per year. Among the Tsimane, an indigenous population of the Bolivian Amazon, children are estimated to hear only 60 hours of speech per year (2.5 days), and yet this does not hinder them from becoming excellent speakers of the Tsimane language. In comparison, large language models (LLMs) have historically needed much more data. For example, LLaMA 2 (an LLM released by Meta in 2023) was trained on approximately 19,600 years’ worth of spoken language. The advantage of an LLM is that it will have broader knowledge than any one person or even group of people, but it is less flexible in how it accesses and applies that knowledge, and it won’t generalise as well as humans do.
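The hours-to-days figures quoted above are simple unit conversions. As a quick check, here is a minimal sketch; the helper name `hours_to_days` is ours and purely illustrative, and it assumes "days" means 24 hours of continuous speech.

```python
HOURS_PER_DAY = 24

def hours_to_days(hours: float) -> float:
    """Convert hours of continuous speech into equivalent 24-hour days."""
    return hours / HOURS_PER_DAY

# Hours of speech heard per year, as quoted in the text.
french_low, french_high = 500, 1000
tsimane = 60

print(round(hours_to_days(french_low), 1))   # 20.8
print(round(hours_to_days(french_high), 1))  # 41.7
print(hours_to_days(tsimane))                # 2.5
```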

This concept is encapsulated by ‘one-trial’ learning, where humans can learn something new on a single trial. Humans can take one piece of new information, relate that information to other past experiences, and better interpret or use this new piece of information. For example, a human can learn a new word and, in ‘one-trial’, conjugate it correctly based on how they have previously learnt to conjugate other words in that language. In order for a machine learning model to be able to conjugate a new word correctly, the relationship between (1) the new word, (2) existing words it already knows, and (3) their conjugated variants must be learned. Once this relationship has been learned, the model can then be used to conjugate the new word correctly. Evidently, the machine learning model has a much more laborious way of learning how to use new words compared to the human brain.


What is meta-learning?

The word ‘meta’ has a Greek origin and refers to ‘the level above’. For example, metaethics is a branch of philosophy that is not concerned with the fundamental questions of morality (for example, ‘what is right and wrong?’) but with questions one level above, such as where moral values originate from or whether there are any objective moral facts.

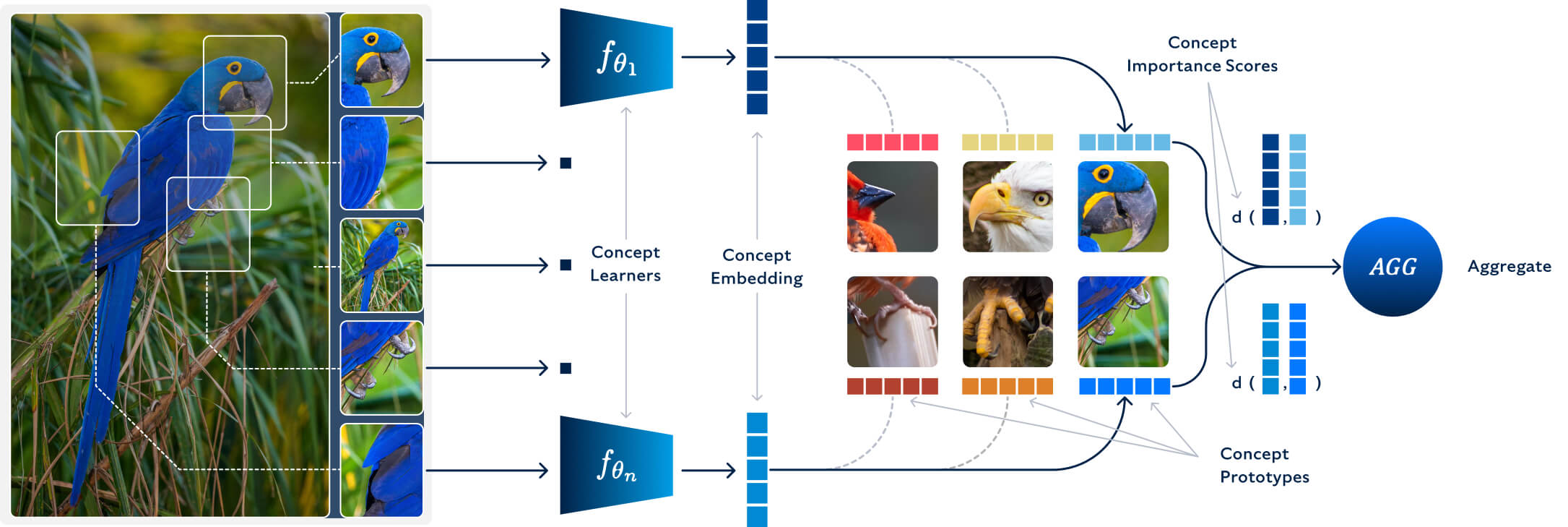

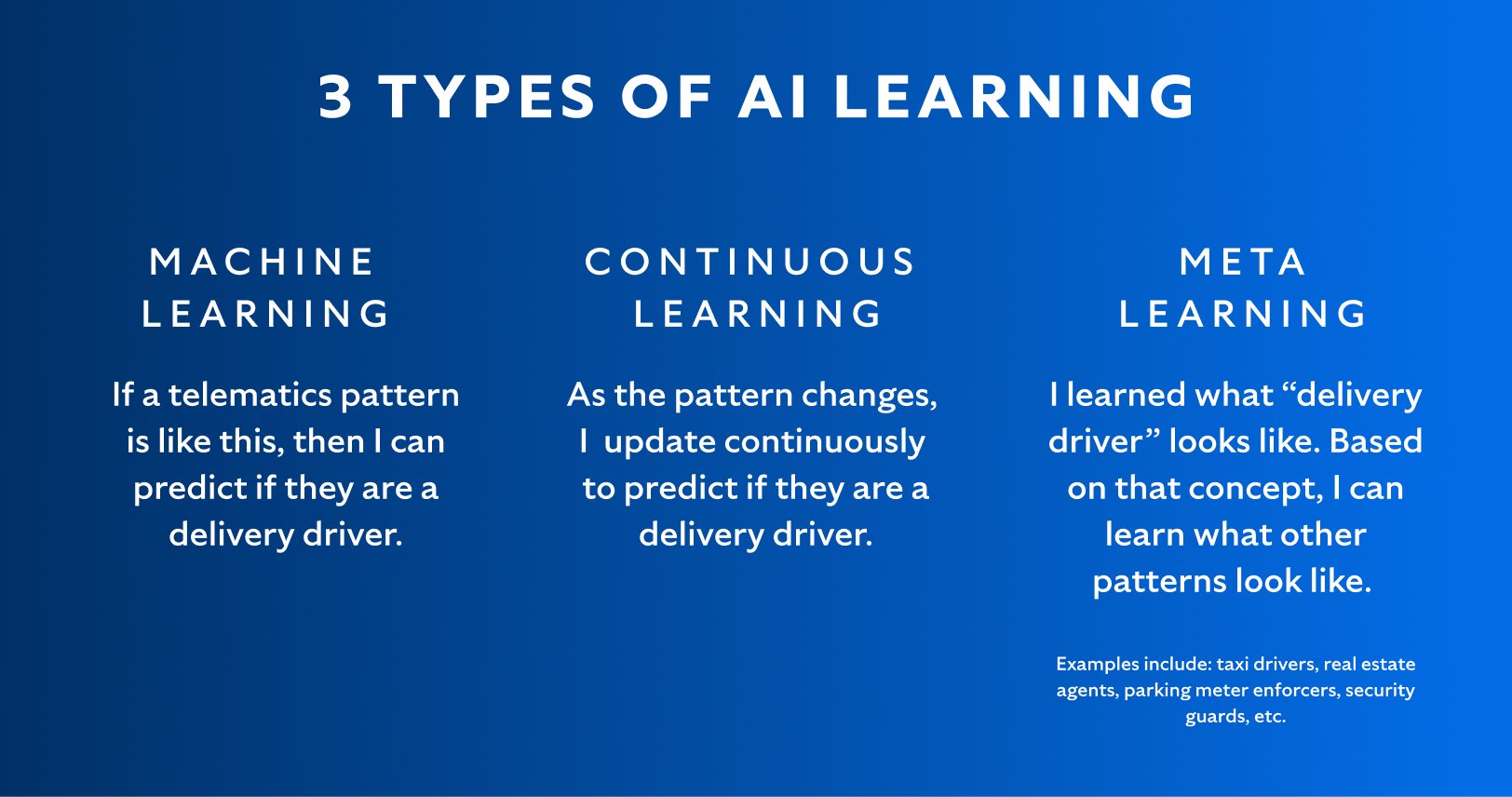

Meta-learning in machine learning refers to algorithms that learn how to learn and optimise their learning process and, therefore, are operating at this higher level of abstraction. This typically includes algorithms that can combine predictions from other machine learning algorithms and learn from the output of other algorithms. Meta-learning also includes algorithms that learn ‘how to learn’ across related yet distinct tasks – this is referred to as ‘multi-task’ learning.

Broadly speaking, machine learning algorithms learn from historical data and create predictions based on patterns found in the data. Meta-learning is focused on learning about learning (when to learn, how to learn, and what to learn). Meta-learning algorithms learn from machine learning models that have themselves been trained on historical data, and can make predictions based on the predictions made by those other models.
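To make "predictions based on the predictions made by other models" concrete, here is a minimal sketch of stacking, one common way of combining models. Everything in it is an illustrative assumption: the two base models are hand-written rules rather than trained models, the data is a toy, and the meta-learner is a simple lookup table rather than anything used in practice.

```python
from collections import Counter, defaultdict

# Two deliberately weak, hypothetical base models: each looks at one feature only.
def base_model_a(x):
    return int(x[0] > 0.5)

def base_model_b(x):
    return int(x[1] > 0.5)

BASE_MODELS = [base_model_a, base_model_b]

def base_predictions(x):
    """The meta-learner's input: the tuple of base-model outputs for x."""
    return tuple(model(x) for model in BASE_MODELS)

def fit_meta_learner(samples, labels):
    """Learn, for each pattern of base predictions, the most common true label."""
    votes = defaultdict(Counter)
    for x, y in zip(samples, labels):
        votes[base_predictions(x)][y] += 1
    return {pattern: counts.most_common(1)[0][0] for pattern, counts in votes.items()}

def predict(meta, x, default=0):
    """Predict from base-model outputs only; use `default` for unseen patterns."""
    return meta.get(base_predictions(x), default)

# Toy task: the true label is 1 only when BOTH features are high.
train_x = [(0.1, 0.2), (0.9, 0.1), (0.2, 0.8), (0.9, 0.9), (0.7, 0.6), (0.3, 0.4)]
train_y = [0, 0, 0, 1, 1, 0]

meta = fit_meta_learner(train_x, train_y)
print(predict(meta, (0.8, 0.7)))  # both base models say 1
print(predict(meta, (0.8, 0.2)))  # base models disagree
```

The meta-learner never sees the raw features, only what the base models said. In a real stacking setup, the meta-learner would normally be fitted on held-out predictions so that it does not simply inherit the base models' overfitting.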

The critical difference between meta-learning and a traditional machine learning algorithm is that while a machine learning model may continuously improve and increase its capability in detecting the ‘classes’ it has been trained on, a meta-learning approach can help it to extend beyond the classes it was trained on, to classify new behaviours.
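One simple way to picture extending a classifier beyond its original classes is nearest-prototype (nearest-centroid) classification, which is in the spirit of some few-shot meta-learning methods. The sketch below is a toy under stated assumptions: the 2-D vectors stand in for embeddings from an already-trained feature extractor, and the class names and numbers are invented for illustration.

```python
import math

def mean_vector(vectors):
    """Component-wise mean of equal-length vectors."""
    n = len(vectors)
    return tuple(sum(v[i] for v in vectors) / n for i in range(len(vectors[0])))

def build_prototypes(support):
    """Map each class name to the mean of its few labelled examples."""
    return {label: mean_vector(examples) for label, examples in support.items()}

def classify(prototypes, x):
    """Return the class whose prototype is closest to x (Euclidean distance)."""
    return min(prototypes, key=lambda label: math.dist(prototypes[label], x))

# Hypothetical embeddings for the classes the system already knows.
support = {
    "cat": [(1.0, 1.0), (1.2, 0.8)],
    "dog": [(4.0, 4.0), (3.8, 4.2)],
}
query = (1.0, 4.8)

before = classify(build_prototypes(support), query)

# Add a brand-new class from just two examples; nothing is retrained.
support["fox"] = [(1.0, 5.0), (0.8, 5.2)]
after = classify(build_prototypes(support), query)

print(before, after)
```

Before the new class is added, the query is forced into the nearest known class; afterwards, two examples are enough for it to be recognised as the new class. All the real difficulty sits in learning an embedding where this works, which the sketch simply assumes.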

One type of meta-learning is known as transfer learning. Instead of learning from scratch, the model builds domain-specific memory and adapts what it has learnt in one domain so that it can be used for a related task in a different domain. For example, Google uses transfer learning in Google Translate. The insights from ‘high-resource’ languages, i.e. languages (such as French and Spanish) for which a lot of written or spoken data is available, can be used for ‘low-resource’ languages (such as Sindhi and Hawaiian), for which little data is available.
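The idea of reusing what was learnt on a data-rich task can be shown with a deliberately tiny example. This is a sketch under our own assumptions and says nothing about how Google Translate is built: the "high-resource" task is fitting y = 2x + 1 from many points, the "low-resource" task is fitting the related line y = 2x + 1.5 from only two points, and both use plain gradient descent.

```python
def predict(params, x):
    w, b = params
    return w * x + b

def train(params, data, steps, lr=0.1):
    """Plain gradient descent on mean squared error for y = w*x + b."""
    w, b = params
    n = len(data)
    for _ in range(steps):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w, b = w - lr * grad_w, b - lr * grad_b
    return w, b

def mse(params, data):
    """Mean squared error of the model on a dataset."""
    return sum((predict(params, x) - y) ** 2 for x, y in data) / len(data)

grid = [i / 10 for i in range(11)]
source_data = [(x, 2 * x + 1.0) for x in grid]   # "high-resource" task
target_train = [(0.2, 1.9), (0.8, 3.1)]          # "low-resource" task: y = 2x + 1.5
target_test = [(x, 2 * x + 1.5) for x in grid]

pretrained = train((0.0, 0.0), source_data, steps=2000)
finetuned = train(pretrained, target_train, steps=20)   # start from source knowledge
scratch = train((0.0, 0.0), target_train, steps=20)     # start from nothing

finetuned_error = mse(finetuned, target_test)
scratch_error = mse(scratch, target_test)
print(finetuned_error < scratch_error)
```

With the same two target examples and the same 20 update steps, the model that starts from the source task's parameters ends up much closer to the target line than the one trained from scratch. The benefit depends entirely on the two tasks being related, which is built into this toy by construction.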

There are, of course, known limitations to this approach: Google’s low-resource languages and their translations can be riddled with gender bias and other social biases. As such, it is paramount to ensure that responsible AI practices are embedded into meta-learning techniques.

While humans are still more data-efficient than machines, meta-learning looks to make the process of learning more efficient in algorithms. It can be a compelling technique to solve challenging business problems, especially where there is not much readily available data for specific classes in a domain. Responsible AI will be critical to ensure the outputs of meta-learning algorithms behave as intended.

How can meta-learning be done responsibly?

The potential for meta-learning is vast. It must, however, be developed in a responsible and human-centred way. The key to using meta-learning techniques well is combining high-quality, reliable data with close collaboration between humans and the ML system. In our last two articles, which focused on model trustworthiness and how models can fail, we emphasised the need for:

- Interpretability and explainability
- Fairness and bias tests
- Monitoring the model and detecting data drift
- Observability of the predictions and the overall system
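Of the practices listed above, drift detection is perhaps the easiest to illustrate in a few lines. The sketch below is a minimal check that assumes a single numeric feature, compares the mean of recent data against a reference window, and uses an arbitrary threshold of two reference standard deviations; the function names are ours, and production monitoring would typically use more robust tests.

```python
from statistics import mean, stdev

def mean_shift_score(reference, live):
    """How many reference standard deviations the live mean has moved."""
    return abs(mean(live) - mean(reference)) / stdev(reference)

def has_drifted(reference, live, threshold=2.0):
    """Flag drift when the shift exceeds the (assumed) threshold."""
    return mean_shift_score(reference, live) > threshold

# Hypothetical feature values: training-time reference vs. two live windows.
reference = [10.0, 11.0, 9.0, 10.5, 9.5, 10.0]
stable = [10.2, 9.8, 10.1, 9.9]
shifted = [13.0, 12.5, 13.5, 13.0]

print(has_drifted(reference, stable))
print(has_drifted(reference, shifted))
```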

In addition, considerations for privacy, safety, and robustness would all play a large role in ensuring the responsible use of meta-learning techniques. Learning based on more limited data may aggravate the risks of learning from poisoned data or incorrectly labelled data. As such, the care needed to ensure that a model continues to learn responsibly cannot be overstated.

Aside from these algorithmic and data design choices, there are also solution design choices that will help to uphold responsible machine learning. For example, the humans interacting with the system should have a clear understanding of where the model may be limited and where their own judgement will outperform that of the machine learning system. There will be specific use cases where human judgement must be prioritised over the recommendation of the machine learning system.
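One way such a design choice might look in practice is a routing rule that only accepts the model's answer when it is confident and otherwise defers to a person. This is a minimal sketch under our own assumptions: the `route` function, the `Decision` type, and the 0.9 threshold are hypothetical, and the approach only makes sense if the model's confidence scores are reasonably well calibrated.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass
class Decision:
    label: str
    decided_by: str  # "model" or "human"

def route(model_label: str, model_confidence: float,
          ask_human: Callable[[], str], threshold: float = 0.9) -> Decision:
    """Accept the model's label only when it is confident; otherwise defer."""
    if model_confidence >= threshold:
        return Decision(model_label, "model")
    return Decision(ask_human(), "human")

# `ask_human` stands in for whatever review step the solution provides.
confident = route("cat", 0.97, ask_human=lambda: "dog")
uncertain = route("cat", 0.55, ask_human=lambda: "dog")

print(confident)
print(uncertain)
```

For use cases where human judgement must always take priority, the same structure applies with the threshold set so that the model's output is only ever advisory.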

The potential upside to meta-learning is that, at its core, it can also provide a more computationally and data-efficient way of learning new tasks, which contrasts with the copious amounts of data needed to train LLMs (as discussed above). Meta-learning may be an attractive and more sustainable approach for some business problems.

To close, learning is fundamental to adapting effectively to a specific environment. One machine learning technique, meta-learning, presents a useful paradigm for solving business problems with less data than other machine learning approaches require. Developing these algorithms responsibly is of paramount importance.

Enjoyed this blog? Check out the rest of the series.
